D3: Invasive Computing and HPC

Principal Investigators:

Prof. H.-J. Bungartz, Prof. M. Gerndt, Prof. M. Bader

Scientific Researchers:

Abstract

The research agenda of D3 is threefold. First, we examine numerical core routines that are widespread in both supercomputing and embedded applications with respect to invasive enhancements. Second, we investigate the advantages of invasive computing on HPC systems by integrating such invasive algorithms into real-life simulation scenarios. Third, we develop concepts to support invasion in standard HPC programming models on state-of-the-art HPC systems.

In the first funding phase, we demonstrated that invasion is a promising paradigm for a wide range of highly relevant numerical algorithms by defining requirements for a set of representative numerical building blocks. Furthermore, invasion allows for more flexible resource usage on HPC architectures: with iOMP, we extended the standard programming model for shared-memory systems with invasive run-time mechanisms that dynamically redistribute cores.
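To illustrate what dynamic core redistribution means, the following Python sketch assigns the cores of a shared-memory node to several applications one core at a time, always giving the next core to the application with the largest marginal speedup. This is a generic greedy heuristic chosen for illustration, not the policy implemented in iOMP; the `App` class, the `redistribute_cores` function, and the Amdahl's-law speedup models are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class App:
    name: str
    speedup: Callable[[int], float]  # hypothetical model: cores -> speedup
    min_cores: int = 1


def redistribute_cores(apps: List[App], total_cores: int) -> Dict[str, int]:
    """Start from each app's minimum, then hand out the remaining cores one by one
    to the app whose speedup grows most from one additional core."""
    alloc = {a.name: a.min_cores for a in apps}
    free = total_cores - sum(alloc.values())
    if free < 0:
        raise ValueError("not enough cores for the minimum allocations")
    for _ in range(free):
        best = max(apps, key=lambda a: a.speedup(alloc[a.name] + 1) - a.speedup(alloc[a.name]))
        alloc[best.name] += 1
    return alloc


def amdahl(parallel_fraction: float) -> Callable[[int], float]:
    """Amdahl's-law speedup for a code with the given parallel fraction."""
    return lambda p: 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / p)


if __name__ == "__main__":
    apps = [App("solver", amdahl(0.99)), App("postproc", amdahl(0.5))]
    print(redistribute_cores(apps, 16))
```

Re-running the function whenever an application starts, ends, or changes phase yields a new distribution. In this example, the nearly fully parallel solver receives all cores beyond the minimum, because every additional core helps it more than the mostly serial post-processing step.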

In the second funding phase, we developed iMPI, an extension of the Message Passing Interface (MPI) for invasive programming. Four additional MPI routines allow programmers to write malleable applications. The MPICH-based implementation of iMPI was integrated with SLURM, a widely used HPC batch system. Invasive MPI applications can thus be run inside a SLURM batch job on any HPC cluster. The Tsunami application as well as a highly parallel inverse solver were implemented with iMPI and used in experiments on SuperMUC, the 6-petaflop supercomputer at the Leibniz Supercomputing Centre in Garching.
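The four iMPI routines themselves are not reproduced here. The sketch below only illustrates the step that every malleable application has to perform once resources have been granted or withdrawn: computing which data blocks move between ranks when a block-distributed array is repartitioned from the old to the new number of processes. The function names are hypothetical, and a real application would carry out the resulting transfers with MPI communication.

```python
from typing import List, Tuple


def block_range(n_items: int, n_ranks: int, rank: int) -> Tuple[int, int]:
    """Half-open range [start, end) owned by `rank` in a balanced block distribution."""
    base, extra = divmod(n_items, n_ranks)
    start = rank * base + min(rank, extra)
    end = start + base + (1 if rank < extra else 0)
    return start, end


def redistribution_plan(n_items: int, old_ranks: int,
                        new_ranks: int) -> List[Tuple[int, int, int, int]]:
    """Transfers (src_rank, dst_rank, start, end) needed to go from the old to the
    new block distribution; entries with src == dst are data that stays local."""
    plan = []
    for src in range(old_ranks):
        s0, e0 = block_range(n_items, old_ranks, src)
        for dst in range(new_ranks):
            s1, e1 = block_range(n_items, new_ranks, dst)
            lo, hi = max(s0, s1), min(e0, e1)
            if lo < hi:  # non-empty overlap between old and new ownership
                plan.append((src, dst, lo, hi))
    return plan


if __name__ == "__main__":
    # Growing a job from 2 to 3 processes that share 10 grid cells
    for step in redistribution_plan(10, 2, 3):
        print(step)
```

When the job grows from two to three processes, only cell 4 (rank 0 to rank 1) and cells 7 to 9 (rank 1 to rank 2) change owner; everything else stays in place. The same function covers shrinking by swapping the two rank counts.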

In the third funding phase, the project will investigate the ability to dynamically enforce power bounds on HPC systems with the help of invasive resource management. It will develop an invasive checkpointing system that adapts to the application requirements by dynamically allocating or releasing the nodes used for in-memory checkpoints. On the application side, the focus will be on using the x86 implementation of the iRTSS on a cluster of Xeon Phi processors to tune applications with respect to complex memory hierarchies.

Synopsis

The overarching goal of the third funding phase is to show that invasive computing can make a difference in HPC, not only by accelerating individual application codes but also in the day-to-day operation of large systems at supercomputing centres. This phase will leverage the results of Phase I and Phase II in the areas of invasive HPC applications and programming models.

A first major concern of today's centres is power consumption, in particular its lack of predictability. Invasive computing can help to increase predictability and thus reduce overall energy costs. Second, centres are worried about higher failure rates due to growing core counts. Here, fast adaptive data recovery can be realised with a resource-aware checkpointing infrastructure. Finally, novel manycore architectures will be deployed, which in particular makes it more complex to handle the memory hierarchy efficiently. Invading memory layers under guidance from the application will allow for more efficient execution.

Approach

Enforcement of Power Corridor

In addition to overall energy consumption, power stability plays an important role in current and future HPC systems. Sudden changes in the power consumption of a large-scale system are dangerous for the electricity supply infrastructure and have to be handled in hardware to allow for short response times. Power stability is also important for the pricing of electrical energy. Contracts between electricity providers and HPC centres are typically optimised for a predictable power draw: drawing more or less power than the agreed power corridor allows increases the costs for the HPC centre. Furthermore, the contract might include dynamic adaptation of the corridor on demand of the power grid, which reduces electricity costs further (Chen, 2014). The computing centre can thus serve as a significant reserve for balancing power usage in the grid.
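The cost argument can be made concrete with a small calculation. The sketch below measures how much energy a sampled power trace draws above the corridor and how much it falls short below it. The trace, the corridor bounds, and the penalty prices in the example are made-up values, and the two-price penalty model is an assumption; actual contract terms differ between centres and providers.

```python
from typing import Sequence, Tuple


def corridor_deviation_kwh(power_kw: Sequence[float], dt_h: float,
                           lower_kw: float, upper_kw: float) -> Tuple[float, float]:
    """Energy drawn above the corridor and energy missing below it, for a power
    trace sampled every dt_h hours (each sample assumed constant over its interval)."""
    above = sum(max(0.0, p - upper_kw) for p in power_kw) * dt_h
    below = sum(max(0.0, lower_kw - p) for p in power_kw) * dt_h
    return above, below


def deviation_cost(above_kwh: float, below_kwh: float,
                   price_above: float, price_below: float) -> float:
    """Hypothetical penalty: separate prices per kWh above and below the corridor."""
    return above_kwh * price_above + below_kwh * price_below


if __name__ == "__main__":
    trace = [900.0, 1100.0, 1300.0, 700.0]  # kW, one sample every 15 minutes
    above, below = corridor_deviation_kwh(trace, 0.25, 800.0, 1200.0)
    print(above, below, deviation_cost(above, below, 0.30, 0.10))
```

With a corridor of 800 to 1200 kW, one 15-minute sample 100 kW too high and one 100 kW too low each contribute 25 kWh of deviation, although the average draw of the trace (1000 kW) lies well inside the corridor.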

We will develop novel power-corridor-aware schedulers for invasive resource management that verify and enforce the power corridor bounds at run time. The invasive batch scheduler will select applications to run in the invasive partition not only with respect to execution and energy efficiency, but also with respect to their potential for controlling power consumption. The invasive runtime scheduler will use this tuning potential of the running applications to enforce the power bounds of the system. When power usage reaches the upper limit, the clock frequency of applications can be reduced and resources can be removed from power-demanding applications. If power drops below the lower bound, applications with the potential for higher power usage can be assigned more resources as well as a higher clock frequency.
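A minimal sketch of the runtime side of this idea, assuming a simple linear power model (power proportional to node count times clock frequency) and a hypothetical frequency range: above the corridor, the scheduler first lowers clocks and only then retreats nodes; below the corridor, it raises clocks and then grows jobs from free nodes, without overshooting the upper bound. The `Job` class, `enforce_corridor`, and all constants are illustrative assumptions, not the project's scheduler.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical DVFS range and step size in GHz
F_MIN, F_MAX, F_STEP = 1.2, 2.4, 0.2
EPS = 1e-9


@dataclass
class Job:
    name: str
    nodes: int
    freq_ghz: float
    watts_per_node_ghz: float  # hypothetical linear model: P = nodes * f * k

    def power_w(self) -> float:
        return self.nodes * self.freq_ghz * self.watts_per_node_ghz


def total_power(jobs: List[Job]) -> float:
    return sum(j.power_w() for j in jobs)


def enforce_corridor(jobs: List[Job], free_nodes: int,
                     lower_w: float, upper_w: float) -> int:
    """Take one small step at a time until total power lies within
    [lower_w, upper_w] or no further step is possible. Returns the free node count."""
    # Above the corridor: lower clocks first (hungriest job first);
    # once every job runs at F_MIN, retreat nodes from the hungriest job.
    while total_power(jobs) > upper_w + EPS:
        slower = [j for j in jobs if j.freq_ghz - F_STEP >= F_MIN - EPS]
        if slower:
            job = max(slower, key=lambda j: j.power_w())
            job.freq_ghz = round(job.freq_ghz - F_STEP, 3)
            continue
        shrinkable = [j for j in jobs if j.nodes > 1]
        if not shrinkable:
            break  # nothing left to throttle
        job = max(shrinkable, key=lambda j: j.power_w())
        job.nodes -= 1
        free_nodes += 1

    # Below the corridor: raise clocks first, then grow jobs with free nodes,
    # skipping any step that would push power above the upper bound.
    while total_power(jobs) < lower_w - EPS:
        stepped = False
        for job in sorted(jobs, key=lambda j: j.freq_ghz):
            extra = job.nodes * F_STEP * job.watts_per_node_ghz
            if (job.freq_ghz + F_STEP <= F_MAX + EPS
                    and total_power(jobs) + extra <= upper_w + EPS):
                job.freq_ghz = round(job.freq_ghz + F_STEP, 3)
                stepped = True
                break
        if not stepped and free_nodes > 0:
            for job in sorted(jobs, key=lambda j: j.power_w()):
                extra = job.freq_ghz * job.watts_per_node_ghz
                if total_power(jobs) + extra <= upper_w + EPS:
                    job.nodes += 1
                    free_nodes -= 1
                    stepped = True
                    break
        if not stepped:
            break  # cannot reach the lower bound without overshooting
    return free_nodes


if __name__ == "__main__":
    jobs = [Job("seissol", 4, 2.4, 100.0), Job("mardyn", 2, 2.4, 100.0)]
    free = enforce_corridor(jobs, free_nodes=2, lower_w=800.0, upper_w=1200.0)
    print(jobs, free, total_power(jobs))
```

Preferring frequency changes over node changes reflects that DVFS takes effect quickly, while retreating or invading nodes requires the application to redistribute its data. A real scheduler would also weigh the performance cost of each step per application.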

Invasive Checkpointing

Fault tolerance becomes increasingly important as HPC systems grow ever faster in size (in terms of core counts), since the huge number of cores and other resources leads to increasing failure rates. General strategies in HPC to provide fault tolerance are redundant computation, fault-tolerant algorithms, and checkpoint/restart. Since redundant computation is far too costly and fault-tolerant algorithms are very rare, we focus on checkpointing. The current application state is saved in periodic checkpoints whose frequency depends on the progress of the application, the availability of resources, and the checkpointing overhead. In case of a failure, the application can then be restarted from the last checkpoint.
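How often to checkpoint is a trade-off between checkpoint overhead and lost work. A common rule of thumb, used here only as an illustration and not necessarily the policy of the invasive checkpointing system, is Young's first-order approximation T_opt ≈ sqrt(2 · C · MTBF), where C is the time to write one checkpoint and MTBF is the mean time between failures of the system. Assuming independent, exponentially distributed node failures, the system MTBF shrinks in proportion to the node count, which is why growing systems need more frequent checkpoints. The node MTBF and checkpoint cost below are hypothetical.

```python
import math


def system_mtbf(node_mtbf_s: float, n_nodes: int) -> float:
    """System MTBF, assuming independent, exponentially distributed node failures."""
    return node_mtbf_s / n_nodes


def young_interval(checkpoint_cost_s: float, mtbf_s: float) -> float:
    """Young's first-order estimate of the optimal time between checkpoints,
    sqrt(2 * C * MTBF); only meaningful when C is much smaller than the MTBF."""
    if checkpoint_cost_s <= 0 or mtbf_s <= 0:
        raise ValueError("checkpoint cost and MTBF must be positive")
    return math.sqrt(2.0 * checkpoint_cost_s * mtbf_s)


if __name__ == "__main__":
    node_mtbf = 1.0e7  # seconds (roughly 116 days) per node, hypothetical
    for nodes in (100, 1000, 10000):
        mtbf = system_mtbf(node_mtbf, nodes)
        print(nodes, mtbf, young_interval(50.0, mtbf))
```

With a checkpoint cost of 50 s, a tenfold increase in node count shortens the suggested interval by a factor of sqrt(10), for example from 1000 s at 1000 nodes to about 316 s at 10,000 nodes.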

In contrast to existing checkpointing systems, our invasive checkpointing system iCheck is a system-level infrastructure that supports multiple applications with checkpointing requirements. This global view allows the required resources, i.e., nodes, network bandwidth, and storage capacity, to be carefully optimised across multiple applications. Resource-aware scheduling of checkpoints across applications will make it possible to reduce the amount of required resources significantly. iCheck will also be unique in supporting malleable jobs. It will leverage invasive resource management to adapt its own resources dynamically to the requirements of the jobs: depending on the number of jobs requiring checkpointing, the amount of data, and the checkpoint frequency, iCheck will allocate nodes that provide memory and computing capacity for in-memory checkpoints, reserve storage, and manage the bandwidth to the nodes and to the storage resources.
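A rough illustration of the sizing problem, under simplifying assumptions: each job keeps only its most recent checkpoint in memory, every checkpoint is replicated on a fixed number of nodes, and the required bandwidth is the average write rate. The `CheckpointRequest` class and `size_checkpoint_pool` function are hypothetical and are not part of iCheck.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CheckpointRequest:
    job: str
    size_gb: float     # size of one checkpoint of this job
    interval_s: float  # time between two checkpoints of this job


def size_checkpoint_pool(reqs: List[CheckpointRequest], node_mem_gb: float,
                         replicas: int = 2) -> Dict[str, float]:
    """Rough sizing of a shared in-memory checkpoint pool.

    Assumes that only the latest checkpoint of each job is kept, that each
    checkpoint is stored on `replicas` different nodes, and that the bandwidth
    requirement is the average write rate over all jobs."""
    memory_gb = sum(r.size_gb for r in reqs) * replicas
    nodes = math.ceil(memory_gb / node_mem_gb)
    bandwidth_gb_s = sum(r.size_gb * replicas / r.interval_s for r in reqs)
    return {"nodes": nodes, "memory_gb": memory_gb, "bandwidth_gb_s": bandwidth_gb_s}


if __name__ == "__main__":
    reqs = [CheckpointRequest("seissol", 100.0, 600.0),
            CheckpointRequest("mardyn", 50.0, 300.0)]
    print(size_checkpoint_pool(reqs, node_mem_gb=96.0))
```

When a job finishes, grows, or changes its checkpoint frequency, re-running the sizing with the updated request list indicates how many nodes such a service could release or would have to invade. Peak bandwidth can be far above the average if all jobs checkpoint at the same moment, which is where cross-application scheduling of checkpoints comes in.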

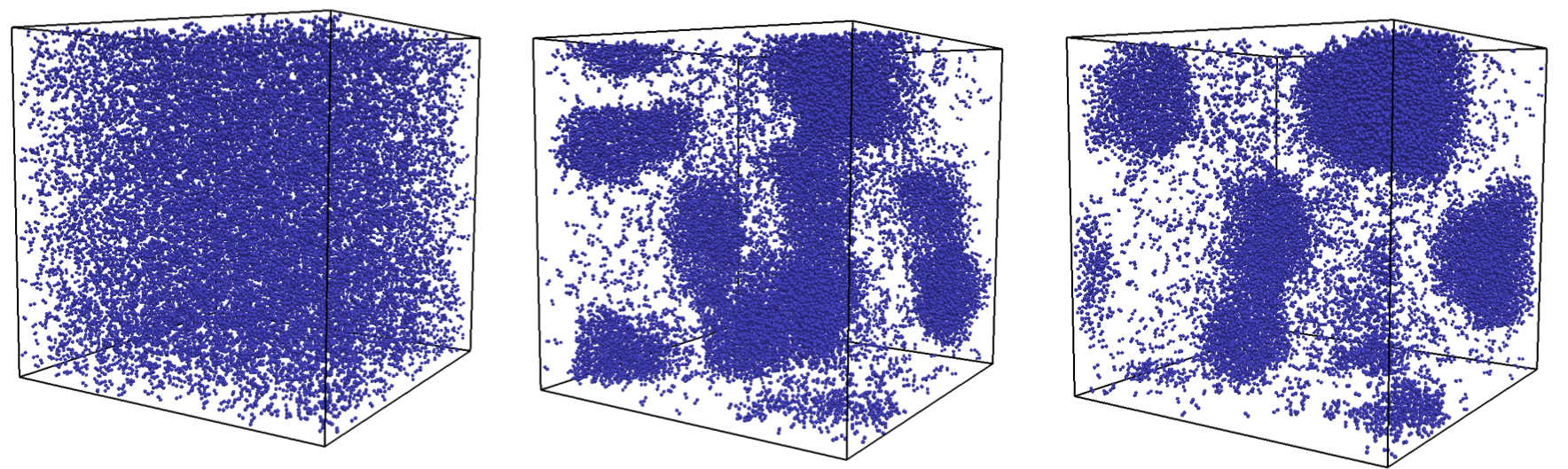

Invasion on Novel Architectures

In close collaboration with project C1, we will also investigate invasive computing on state-of-the-art manycore processors. The focus will be on Intel Xeon Scalable processors with persistent memory; Intel Xeon Gold 6238 processors with Intel Optane Persistent Memory will be used for this investigation. The multi-level memory architecture poses challenges on both the system and the application side. We will study invasive applications for this architecture, starting with the molecular dynamics application ls1 MarDyn, with a focus on fine-grained parallelism in HPC applications. We will also look for higher-level abstractions that simplify application development based on the invasive API.
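One way an application could guide memory invasion is by ranking its data structures by access density (accesses per byte) and placing the hottest ones in DRAM while the rest go to persistent memory. The sketch below implements this greedy heuristic; the object names, sizes, and access rates are hypothetical hints, and the heuristic is a stand-in chosen for illustration rather than the project's method. Explicit placement of this kind only applies when Optane memory is exposed to software directly (App Direct mode), not when DRAM acts as a cache in front of it (Memory Mode).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DataObject:
    name: str
    size_gb: float         # must be positive
    accesses_per_s: float  # hypothetical access-rate hint from the application


def place_objects(objs: List[DataObject], dram_gb: float) -> Dict[str, str]:
    """Greedy tiering: visit objects by decreasing access density (accesses per GB)
    and put each in DRAM if it still fits, otherwise in persistent memory."""
    placement: Dict[str, str] = {}
    free_gb = dram_gb
    for obj in sorted(objs, key=lambda o: o.accesses_per_s / o.size_gb, reverse=True):
        if obj.size_gb <= free_gb:
            placement[obj.name] = "DRAM"
            free_gb -= obj.size_gb
        else:
            placement[obj.name] = "PMem"
    return placement


if __name__ == "__main__":
    objs = [DataObject("cell_lists", 20.0, 1.0e6),
            DataObject("molecules", 150.0, 5.0e6),
            DataObject("io_buffers", 100.0, 10.0)]
    print(place_objects(objs, dram_gb=192.0))
```

Greedy selection by density is the classic approximation for this knapsack-like problem and is not optimal in general; its appeal here is that an application can recompute the placement cheaply whenever its access pattern or its invaded DRAM capacity changes.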

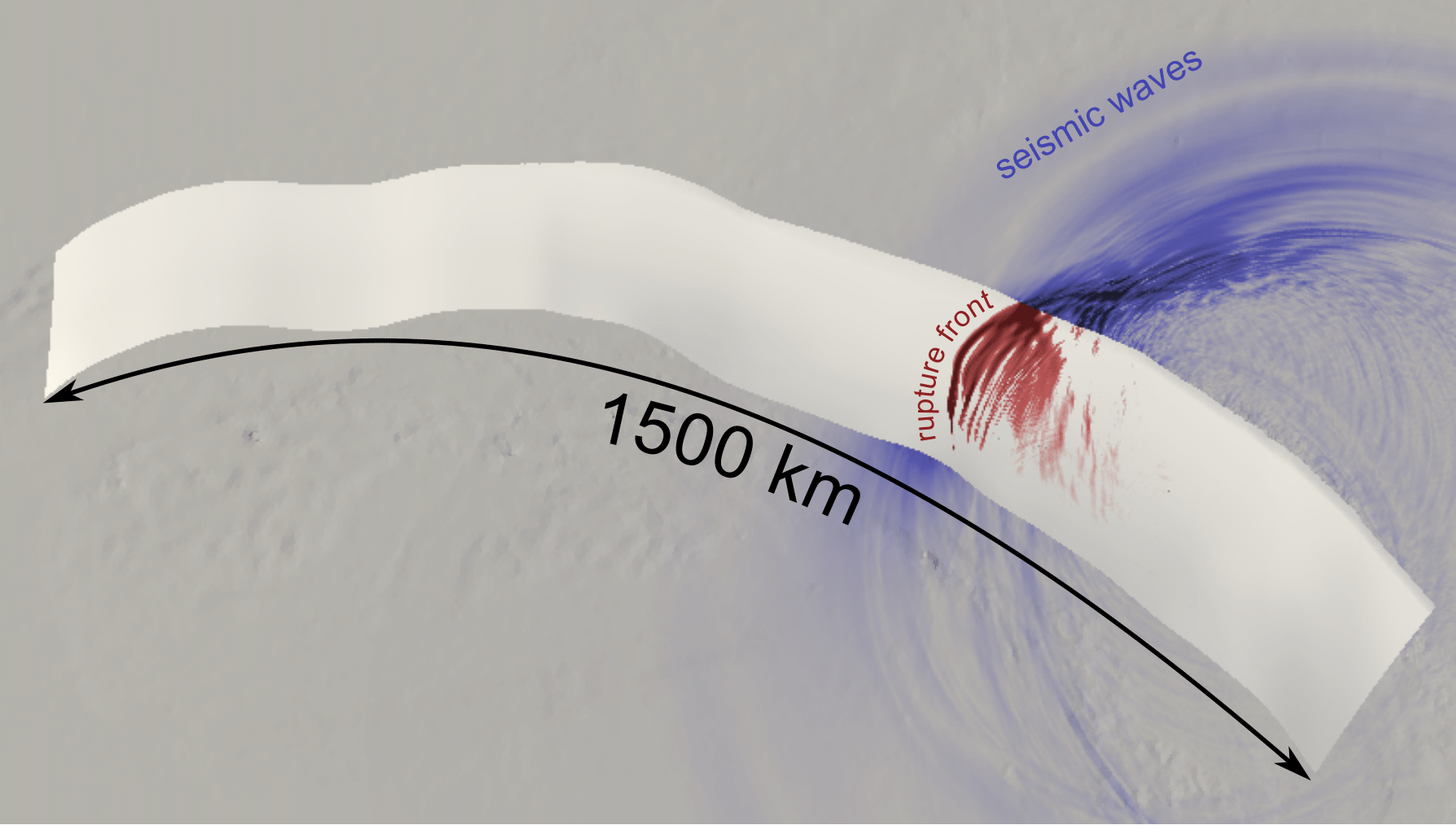

Invasion for Large-Scale Applications

The project will continue our efforts to invasify large-scale, real-world applications that can fill systems such as the upcoming SuperMUC-NG and that exhibit highly dynamic resource requirements. To this end, we will focus on invasifying the earthquake simulation code SeisSol and the molecular dynamics code ls1 MarDyn. In SeisSol, for example, a large gain can be expected in rupture simulations, where most of the simulation time is characterised by the dynamics of the rupture process. Here, we intend to apply a lazy activation approach that activates a parallel partition only once seismic waves reach it. For ls1 MarDyn, one of our main simulation scenarios is nucleation processes in large-scale chemical reactors.
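The potential gain of lazy activation can be estimated with a deliberately simplified 1D model: partitions of equal width are lined up along the propagation direction, the wavefront travels at constant speed from the source, and a partition only consumes resources after the front has reached it. The functions and the numbers in the example are hypothetical; SeisSol itself works on unstructured 3D tetrahedral meshes.

```python
from typing import List


def activation_times(n_partitions: int, width_km: float, speed_km_s: float) -> List[float]:
    """Partition i covers [i*width, (i+1)*width) along a line starting at the source
    (x = 0); it is activated when the wavefront, moving at constant speed, reaches
    its near edge."""
    return [i * width_km / speed_km_s for i in range(n_partitions)]


def active_partition_seconds(times: List[float], t_end: float) -> float:
    """Total time the partitions are active up to t_end, a proxy for resource usage."""
    return sum(max(0.0, t_end - t) for t in times)


if __name__ == "__main__":
    times = activation_times(10, width_km=50.0, speed_km_s=2.5)
    lazy = active_partition_seconds(times, t_end=200.0)
    eager = 10 * 200.0  # all partitions active from t = 0
    print(lazy, eager, lazy / eager)
```

In this toy setting, the partitions are active for 55% of the partition-time that an eager start would consume. The actual saving in SeisSol depends on the mesh partitioning and on how long the rupture takes to cross the domain.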

Snapshot of the rupture front and emanating seismic waves during a simulation of the Sumatra 2004 earthquake using SeisSol. The dynamic rupture process of the earthquake was initiated in the bottom right corner and takes a substantial part of the total simulation time to propagate through the entire domain.

ls1 MarDyn: nucleation and droplet formation in a supersaturated fluid.

A comprehensive summary of the major achievements of the first and second funding phases can be found on the Project D3 first phase and Project D3 second phase websites.

Publications

| [1] | Nidhi Anantharajaiah, Tamim Asfour, Michael Bader, Lars Bauer, Jürgen Becker, Simon Bischof, Marcel Brand, Hans-Joachim Bungartz, Christian Eichler, Khalil Esper, Joachim Falk, Nael Fasfous, Felix Freiling, Andreas Fried, Michael Gerndt, Michael Glaß, Jeferson Gonzalez, Frank Hannig, Christian Heidorn, Jörg Henkel, Andreas Herkersdorf, Benedict Herzog, Jophin John, Timo Hönig, Felix Hundhausen, Heba Khdr, Tobias Langer, Oliver Lenke, Fabian Lesniak, Alexander Lindermayr, Alexandra Listl, Sebastian Maier, Nicole Megow, Marcel Mettler, Daniel Müller-Gritschneder, Hassan Nassar, Fabian Paus, Alexander Pöppl, Behnaz Pourmohseni, Jonas Rabenstein, Phillip Raffeck, Martin Rapp, Santiago Narváez Rivas, Mark Sagi, Franziska Schirrmacher, Ulf Schlichtmann, Florian Schmaus, Wolfgang Schröder-Preikschat, Tobias Schwarzer, Mohammed Bakr Sikal, Bertrand Simon, Gregor Snelting, Jan Spieck, Akshay Srivatsa, Walter Stechele, Jürgen Teich, Furkan Turan, Isaías A. Comprés Ureña, Ingrid Verbauwhede, Dominik Walter, Thomas Wild, Stefan Wildermann, Mario Wille, Michael Witterauf, and Li Zhang. Invasive Computing. FAU University Press, August 16, 2022. [ DOI ] |

| [2] | Xingfu Wu, Aniruddha Marathe, Siddhartha Jana, Ondrej Vysocky, Jophin John, Andrea Bartolini, Lubomir Riha, Michael Gerndt, Valerie Taylor, and Sridutt Bhalachandra. Toward an end-to-end auto-tuning framework in HPC PowerStack. In Energy Efficient HPC State of Practice 2020, 2020. accepted for publication. |

| [3] | Mohak Chadha, Jophin John, and Michael Gerndt. Extending SLURM for dynamic resource-aware adaptive batch scheduling. In IEEE International Conference on High Performance Computing, Data, and Analytics (HiPC) 2020, 2020. accepted for publication. |

| [4] | Ao Mo-Hellenbrand. Resource-Aware and Elastic Parallel Software Development for Distributed-Memory HPC Systems. Dissertation, Technische Universität München, Munich, 2019. [ http ] |

| [5] | Jophin John, Santiago Narvaez R, and Michael Gerndt. Invasive computing for power corridor management. In Proceedings of the ParCo 2019: International Conference on Parallel Computing, 2019. accepted for publication. |

| [6] | Mohak Chadha and Michael Gerndt. Modelling DVFS and UFS for region-based energy aware tuning of HPC applications. In IEEE International Parallel and Distributed Processing Symposium (IPDPS 2019), 2019. |

| [7] | Jeeta Ann Chacko, Isaías Alberto Comprés Ureña, and Michael Gerndt. Integration of Apache Spark with invasive resource manager. In 2019 IEEE SmartWorld, Ubiquitous Intelligence and Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People and Smart City Innovation, 2019. Best Paper Award. |

| [8] | Santiago Narvaez. Power model for resource-elastic applications. Master thesis, Technische Universität München, Munich, 2018. [ http ] |

| [9] | Carsten Uphoff, Sebastian Rettenberger, Michael Bader, Stephanie Wollherr, Thomas Ulrich, Elizabeth H. Madden, and Alice-Agnes Gabriel. Extreme scale multi-physics simulations of the tsunamigenic 2004 Sumatra megathrust earthquake. In SC17: The International Conference for High Performance Computing, Networking, Storage and Analysis Proceedings. ACM, 2017. [ DOI ] |

| [10] | Ao Mo-Hellenbrand, Isaías Comprés, Oliver Meister, Hans-Joachim Bungartz, Michael Gerndt, and Michael Bader. A large-scale malleable tsunami simulation realized on an elastic MPI infrastructure. In Proceedings of the Computing Frontiers Conference (CF), pages 271–274. ACM, 2017. [ DOI ] |

| [11] | Isaías Alberto Comprés Ureña. Resource-Elasticity Support for Distributed Memory HPC Applications. Dissertation, Technical University of Munich, Munich, 2017. [ arXiv | http ] |

| [12] | Jürgen Teich. Invasive computing – editorial. it – Information Technology, 58(6):263–265, November 24, 2016. [ DOI ] |

| [13] | Stefan Wildermann, Michael Bader, Lars Bauer, Marvin Damschen, Dirk Gabriel, Michael Gerndt, Michael Glaß, Jörg Henkel, Johny Paul, Alexander Pöppl, Sascha Roloff, Tobias Schwarzer, Gregor Snelting, Walter Stechele, Jürgen Teich, Andreas Weichslgartner, and Andreas Zwinkau. Invasive computing for timing-predictable stream processing on MPSoCs. it – Information Technology, 58(6):267–280, September 30, 2016. [ DOI ] |

| [14] | Weifeng Liu, Michael Gerndt, and Bin Gong. Model-based MPI-IO tuning with Periscope tuning framework. Concurrency and Computation: Practice and Experience, 28(1):3–20, 2016. [ DOI ] |

| [15] | Hans Michael Gerndt, Michael Glaß, Sri Parameswaran, and Barry L. Rountree. Dark Silicon: From Embedded to HPC Systems (Dagstuhl Seminar 16052). Dagstuhl Reports, 6(1):224–244, 2016. [ DOI ] |

| [16] | Martin Schreiber, Christoph Riesinger, Tobias Neckel, Hans-Joachim Bungartz, and Alexander Breuer. Invasive compute balancing for applications with shared and hybrid parallelization. International Journal of Parallel Programming, September 2014. [ DOI ] |

| [17] | Carsten Tradowsky, Martin Schreiber, Malte Vesper, Ivan Domladovec, Maximilian Braun, Hans-Joachim Bungartz, and Jürgen Becker. Towards dynamic cache and bandwidth invasion. In Reconfigurable Computing: Architectures, Tools, and Applications, volume 8405 of Lecture Notes in Computer Science, pages 97–107. Springer International Publishing, April 2014. [ DOI ] |

| [18] | Martin Schreiber. Cluster-Based Parallelization of Simulations on Dynamically Adaptive Grids and Dynamic Resource Management. Dissertation, Institut für Informatik, Technische Universität München, January 2014. [ .pdf ] |

| [19] | Martin Schreiber, Tobias Weinzierl, and Hans-Joachim Bungartz. SFC-based communication metadata encoding for adaptive mesh refinement. In Michael Bader, editor, Proceedings of the International Conference on Parallel Computing (ParCo), October 2013. |

| [20] | Martin Schreiber, Christoph Riesinger, Tobias Neckel, and Hans-Joachim Bungartz. Invasive compute balancing for applications with hybrid parallelization. In Proceedings of the International Symposium on Computer Architecture and High Performance Computing (SBAC-PAD). IEEE, October 2013. |

| [21] | Martin Schreiber, Tobias Weinzierl, and Hans-Joachim Bungartz. Cluster optimization and parallelization of simulations with dynamically adaptive grids. In Euro-Par 2013, August 2013. |

| [22] | Hans-Joachim Bungartz, Christoph Riesinger, Martin Schreiber, Gregor Snelting, and Andreas Zwinkau. Invasive computing in HPC with X10. In X10 Workshop (X10'13), X10 '13, pages 12–19, New York, NY, USA, 2013. ACM. [ DOI ] |

| [23] | Michael Gerndt, Andreas Hollmann, Marcel Meyer, Martin Schreiber, and Josef Weidendorfer. Invasive computing with iOMP. In Proceedings of the Forum on Specification and Design Languages (FDL), pages 225–231, September 2012. |

| [24] | Isaías A. Comprés Ureña, Michael Riepen, Michael Konow, and Michael Gerndt. Invasive MPI on Intel's Single-Chip Cloud Computer. In Andreas Herkersdorf, Kay Römer, and Uwe Brinkschulte, editors, Proceedings of the 25th International Conference on Architecture of Computing Systems (ARCS), volume 7179 of Lecture Notes in Computer Science, pages 74–85. Springer, February 2012. [ DOI ] |

| [25] | Martin Schreiber, Hans-Joachim Bungartz, and Michael Bader. Shared memory parallelization of fully-adaptive simulations using a dynamic tree-split and -join approach. In Proceedings of HiPC 2012, pages 1–10. IEEE, 2012. |

| [26] | Andreas Hollmann and Michael Gerndt. Invasive computing: An application assisted resource management approach. In Victor Pankratius and Michael Philippsen, editors, Multicore Software Engineering, Performance, and Tools, volume 7303 of Lecture Notes in Computer Science, pages 82–85. Springer Berlin Heidelberg, 2012. [ DOI ] |

| [27] | Michael Bader, Hans-Joachim Bungartz, and Martin Schreiber. Invasive computing on high performance shared memory systems. In Facing the Multicore-Challenge III, volume 7686 of Lecture Notes in Computer Science, pages 1–12, 2012. |

| [28] | Michael Bader, Hans-Joachim Bungartz, Michael Gerndt, Andreas Hollmann, and Josef Weidendorfer. Invasive programming as a concept for HPC. In Proceedings of the 10th IASTED International Conference on Parallel and Distributed Computing and Networks 2011 (PDCN), February 2011. [ DOI ] |

| [29] | Jürgen Teich, Jörg Henkel, Andreas Herkersdorf, Doris Schmitt-Landsiedel, Wolfgang Schröder-Preikschat, and Gregor Snelting. Invasive computing: An overview. In Michael Hübner and Jürgen Becker, editors, Multiprocessor System-on-Chip – Hardware Design and Tool Integration, pages 241–268. Springer, Berlin, Heidelberg, 2011. [ DOI ] |

| [30] | Hans-Joachim Bungartz, Bernhard Gatzhammer, Michael Lieb, Miriam Mehl, and Tobias Neckel. Towards multi-phase flow simulations in the PDE framework peano. Computational Mechanics, 48(3):365–376, 2011. [ .pdf ] |

| [31] | Jürgen Teich. Invasive algorithms and architectures. it - Information Technology, 50(5):300–310, 2008. |

| [32] | Andreas Hollmann and Michael Gerndt. Invasive computing: An application assisted resource management approach. In MSEPT, pages 82–85. [ DOI ] |

| [33] | Andreas Hollmann and Michael Gerndt. iOMP language specification 1.0. Internal Report. |

| [34] | Isaías A. Comprés Ureña and Michael Gerndt. Improved RCKMPI's SCCMPB channel: Scaling and dynamic processes support. 4th MARC Symposium. |