Events 2023

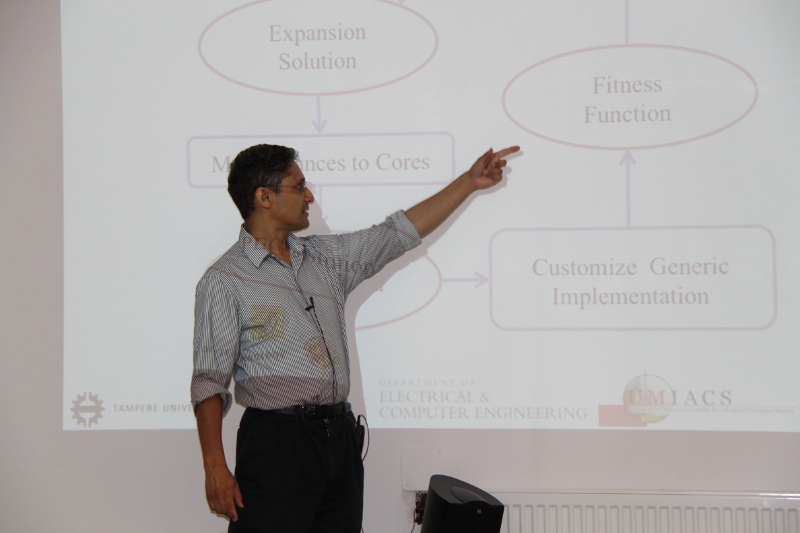

InvasIC Seminar, June 07, 2023 at FAU: An Agile and Explainable Exploration of Efficient HW/SW Codesigns of Deep Learning Accelerators Using Bottleneck Analysis

Prof. Aviral Shrivastava (Arizona State University).

Prof. Shrivastava with Prof. Jürgen Teich

Effective design space exploration (DSE) is paramount for hardware/software codesigns of deep learning accelerators that must meet strict execution constraints. Because the search space is vast, existing DSE techniques can require an excessive number of trials to obtain a valid and efficient solution: they rely on black-box explorations that do not reason about design inefficiencies. We propose Explainable-DSE, a framework for DSE of DNN accelerator codesigns using bottleneck analysis. By leveraging information about execution costs from bottleneck models, our DSE can identify the bottlenecks, and thus the reasons for design inefficiency, and can make mitigating acquisitions in further explorations. We describe the construction of such bottleneck models for the DNN accelerator domain. We also propose an API for expressing such domain-specific models and integrating them into the DSE framework. The acquisitions of our DSE framework cater to multiple bottlenecks in executions of workloads like DNNs that contain different functions with diverse execution characteristics. Evaluations for recent computer vision and language models show that Explainable-DSE mostly explores effectual candidates, achieving codesigns of 6× lower latency in 47× fewer iterations vs. non-explainable techniques using evolutionary or ML-based optimizations. By taking minutes or tens of iterations, it enables opportunities for runtime DSEs.

Events 2022

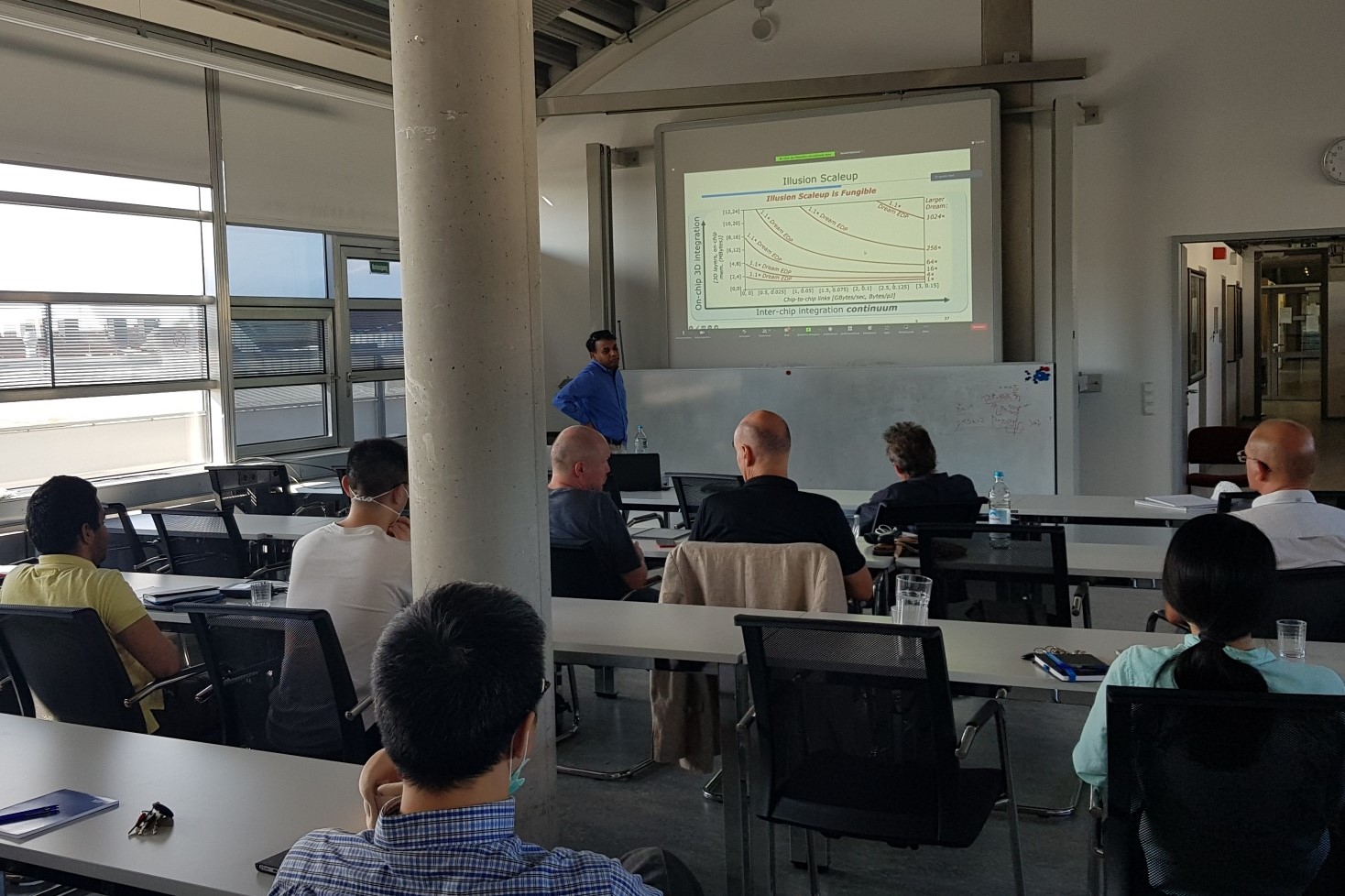

InvasIC Seminar, July 28, 2022 at TUM: The Future of Hardware Technologies for Computing: N3XT 3D MOSAIC, Illusion Scaleup, Co-Design

Prof. Subhasish Mitra (Stanford University).

Prof. Mitra at TUM

The computation demands of 21st-century abundant-data workloads, such as AI / machine learning, far exceed the capabilities of today’s computing systems. For example, a Dream AI Chip would ideally co-locate all memory and compute on a single chip, quickly accessible at low energy. Such Dream Chips aren’t realizable today. Computing systems instead use large off-chip memory and spend enormous time and energy shuttling data back-and-forth. This memory wall gets worse with growing problem sizes, especially as conventional transistor miniaturization gets increasingly difficult. The next leap in computing performance requires the next leap in integration. Just as integrated circuits brought together discrete components, this next level of integration must seamlessly fuse disparate parts of a system – e.g., compute, memory, inter-chip connections – synergistically for large energy and execution time benefits. This talk presented such transformative NanoSystems by exploiting the unique characteristics of emerging nanotechnologies and abundant-data workloads. We created new chip architectures through ultra-dense (e.g., monolithic) 3D integration of logic and memory – the N3XT 3D approach. Multiple N3XT 3D chips were integrated through a continuum of chip stacking/interposer/wafer-level integration — the N3XT 3D MOSAIC. To scale with growing problem sizes, new Illusion systems orchestrate workload execution on N3XT 3D MOSAIC creating an illusion of a Dream Chip with near-Dream energy and throughput. Beyond existing cloud-based training, we demonstrated the first non-volatile chips for accurate edge AI training (and inference) through new incremental training algorithms that are aware of underlying non-volatile memory technology constraints. Several hardware prototypes, built in industrial and research fabrication facilities, demonstrate the effectiveness of our approach. We targeted 1,000X system-level energy-delay-product benefits, especially for abundant-data workloads. 
We also addressed new ways of ensuring robust system operation despite growing challenges of design bugs, manufacturing defects, reliability failures, and security attacks.

InvasIC Seminar, July 7, 2022 at TUM: A Principled Approach for Accelerating AI Systems Innovations

Dr. Jinjun Xiong (University at Buffalo)

Dr. Jinjun Xiong at TUM

AI has become an increasingly powerful technology force that will transform different aspects of our society, such as transportation, healthcare, education, and scientific discovery. But the diverse layers of software abstractions, hardware heterogeneity, privacy and security concerns, and big-data driven machine learning models have made the development of optimized AI solutions extremely challenging. This talk discussed some of my related research efforts over the past decade on developing enterprise-scale, high-performance AI solutions and innovating related AI systems technologies: in particular, a data-driven reviewer recommendation AI solution, automation tools to identify system performance bottlenecks, and innovative software-hardware co-optimization algorithms for accelerating AI algorithms. I contextualized these efforts within my work on developing a principled approach for accelerating AI systems innovations. I also discussed a couple of other related AI research efforts, for example, one on developing statistical neural networks inspired by my early work on statistical timing analysis, and one on novel neural architecture search.

InvasIC Seminar, May 27, 2022 at FAU: The FABulous Open Source eFPGA Framework

Prof. Dr. Dirk Koch (Universität Heidelberg)

This talk introduced the FABulous open source eFPGA framework, which was used in the design of multiple chips. FABulous deals with all the modeling, fabric synthesis, ASIC implementation, and simulation/emulation aspects, as well as the compilation of user bitstreams that will ultimately run on the generated fabrics. For this, FABulous integrates several other open source tools, including Yosys, nextpnr, OpenROAD, and Verilator. The talk also discussed some of the FPGA engineering challenges and presented some of the recent chips, including an open-everything chip and a ReRAM (memristor) FPGA test chip.

InvasIC Seminar, May 20, 2022 at FAU: A RISC-V Experience Report

Prof. Dr. Michael Engel (Universität Bamberg)

The RISC-V architecture is one of the promising foundations for current and upcoming research projects as well as commercial products. It is especially attractive due to its open instruction-set architecture, which allows compliant implementations to be created without having to license the use of specifications or IP implementations from a processor designer. In recent years, a whole ecosystem of open as well as commercial hardware and software solutions based on RISC-V has emerged, with the vision of being able to create a completely open source computer system, from the first bit of hardware (description) to the last bit of software. After an overview of the RISC-V architecture and available components, in this talk, I discussed aspects of the RISC-V architecture and specifically its hardware/software interface relevant for system software. Based on previous projects to port system-level code together with my students, I analyzed intricacies of the interface RISC-V provides to system software as well as the different relevant instruction set extensions available and in development. Finally, I gave a glimpse of ongoing research in my group at Bamberg University and at NTNU which uses RISC-V to investigate novel approaches to hardware/software interfacing for systems with persistent main memory as well as distributed operating system environments for IoT applications.

Prof. Dr.-Ing. Jürgen Teich and Prof. Dr.-Ing. Michael Engel

InvasIC Seminar, February 3, 2022, Virtual Talk: Hardware/Software Co-Design of Deep Learning Accelerators

Prof. Dr. Yiyu Shi (University of Notre Dame, USA)

The prevalence of deep neural networks today is supported by a variety of powerful hardware platforms including GPUs, FPGAs, and ASICs. A fundamental question lies in almost every implementation of deep neural networks: given a specific task, what is the optimal neural architecture and the tailor-made hardware in terms of accuracy and efficiency? Earlier approaches attempted to address this question through hardware-aware neural architecture search (NAS), where features of a fixed hardware design are taken into consideration when designing neural architectures. However, we believe that the best practice is through the simultaneous design of the neural architecture and the hardware to identify the best pairs that maximize both test accuracy and hardware efficiency. In this talk, we presented novel co-exploration frameworks for neural architecture and various hardware platforms including FPGA, NoC, ASIC and Computing-in-Memory, all of which are the first in the literature. We demonstrated that our co-exploration concept greatly opens up the design freedom and pushes forward the Pareto frontier between hardware efficiency and test accuracy for better design tradeoffs.

Prof. Dr.-Ing. Ulf Schlichtmann and Prof. Dr. Yiyu Shi.

Events 2021

InvasIC Seminar, July 16, 2021, Virtual Talk: Hardware-Software Contracts for Safe and Secure Systems

Univ.-Prof. Dr. Jan Reineke (Universität des Saarlandes)

Trains, planes, and other safety- and security-critical systems that modern society relies on are controlled by computer systems, as is much of our critical infrastructure, including the power grid and cellular networks. But can we trust in the safety and security of these systems?

I argued that today's hardware-software abstractions, instruction set architectures (ISAs), are fundamentally inadequate for the development of safe or secure systems. Indeed, ISAs abstract from timing, making it impossible to develop safety-critical systems that have to satisfy real-time constraints on top of them. Neither do ISAs provide sufficient security guarantees, making it impossible to develop secure systems on top of them. As a consequence, engineers are forced to rely on brittle timing and security models that are proven wrong time and again, as evidenced, e.g., by the recent Spectre attacks, putting our society at risk.

I proposed to tackle this problem at its root by introducing novel hardware-software contracts that extend the guarantees provided by ISAs to capture key non-functional properties. These hardware-software contracts will formally capture the expectations on correct hardware implementations and will lay the foundation for achieving safety and security guarantees at the software level. This will enable the systematic engineering of safe and secure hardware-software systems we can trust in.

InvasIC Seminar, June 11, 2021, Virtual Talk: Advancing Coarse-Grained Reconfigurable Array (CGRA) with Compiler/Architecture Co-design

Professor Tulika Mitra (National University of Singapore)

Prof. Dr.-Ing. Jürgen Teich and Prof. Dr. Tulika Mitra.

Internet of Things (IoT) system architectures are rapidly moving towards edge computing, where computational intelligence is embedded in the edge devices at or near the sensors instead of the remote cloud for time-sensitivity, security, privacy, and connectivity reasons. At present, the limited computing capability of power- and area-constrained edge devices severely restricts their potential. In this talk, I presented ultra-low power, Coarse-Grained Reconfigurable Array (CGRA) accelerators as a promising approach to offer close to fixed-functionality ASIC-like energy efficiency while supporting diverse applications through full compile-time configurability. The central challenge is efficient spatio-temporal mapping of the application expressed in high-level programming languages with complex control flow and memory accesses to a concrete CGRA accelerator by respecting its constraints. We approach this challenge through a synergistic hardware-software co-designed approach with (a) innovations at the accelerator architecture level to improve the efficiency of the application execution as well as ease the burden on compilation, and (b) innovations at the compiler level to fully exploit the architectural optimizations for efficient and fast mapping on the accelerator.

InvasIC Seminar, June 8, 2021, Virtual Talk: Adversarial Machine Learning in the Audio Domain

Professor Dr. Thorsten Holz (Ruhr-Universität)

The advent of Deep Learning has transformed our digital society. Starting with simple recommendation techniques or image recognition applications, machine-learning systems have evolved to play games at eye level with humans, identify faces, or recognize speech at the level of human listeners. These systems are now virtually ubiquitous, gaining access to critical and sensitive areas of our daily lives. On the downside, such algorithms are brittle and vulnerable to malicious input, so-called adversarial examples. In these evasion attacks, a targeted input is perturbed by imperceptible amounts of noise to trigger misclassification of the victim's neural network. In this talk, we provided an overview of some recent results in this area, with a focus on adversarial attacks against automatic speech recognition systems. Furthermore, we also sketched challenges for detecting deep fake images and similar kinds of artificially generated media.

InvasIC Seminar, May 14, 2021, Virtual Talk: Full-Stack Optimizations for Next-Generation Deep-Learning Accelerators

Professor Yakun Sophia Shao (University of California, Berkeley)

Machine learning is poised to substantially change society in the next 100 years, just as electricity transformed the way industries functioned in the past century. In particular, deep learning has been adopted across a wide variety of industries, from computer vision and natural language processing to autonomous driving and robotic manipulation. Motivated by the high computational requirements of deep learning, a large number of novel deep-learning accelerators have been proposed in academia and industry to meet the performance and efficiency demands of deep-learning applications. In this talk, I provided an overview of challenges and opportunities for domain-specific architectures for deep learning. In particular, I discussed some of our recent efforts in building efficient deep-learning accelerators through full-stack optimizations at circuits, architecture, and compiler levels.

InvasIC Seminar, April 20, 2021, Virtual Talk: In Hardware We Trust? From TPMs to Enclave Computing on RISC-V

Professor Dr. Ahmad Sadeghi (TU Darmstadt)

The large attack surface of commodity operating systems has motivated academia and industry to develop novel security architectures that provide strong hardware-assisted protection for sensitive applications using the so-called enclaves. However, deployed enclave architectures often lack important security features, and assume threat models which do not cover cross-layer attacks, such as microarchitectural exploits and beyond. Thus, recent academic research has proposed a new line of enclave architectures with distinct features and more comprehensive threat models, many of which were developed on the open RISC-V architecture.

In this talk, we presented a brief overview of the Trusted Computing landscape, its promises and pitfalls. We discussed selected recently proposed RISC-V based enclave architectures, their features, limitations and open challenges, which we aim to tackle in our current research using our security architecture CURE. Finally, we briefly reported on the insights we gained on cross-layer attacks in the world’s largest hardware security competition franchise, which we have been organizing with Intel and Texas A&M University since 2018.

InvasIC Seminar, March 25, 2021, Virtual Talk: Cyber-Physical Systems Security: A Pacemaker Case Study

Partha Roop (University of Auckland)

Cyber-Physical attacks (CP attacks), originating in cyber space but damaging physical infrastructure, are a significant recent research focus. Such attacks have affected many Cyber-Physical Systems (CPSs) such as smart grids, intelligent transportation systems and medical devices. In this talk we considered techniques for the detection and mitigation of CP attacks on medical devices. Such attacks clearly have immense safety implications. Our work is based on formal methods, a class of mathematically founded techniques for the specification and verification of safety-critical systems. A cardiac pacemaker was our motivating medical device and we described its interaction with the heart. We then provided an overview of formal methods with particular emphasis on run-time based approaches, which are ideal for the design of security monitors. Subsequently, we discussed in detail two recent approaches developed in our group for attack detection and mitigation. We thus provided an overview of run-time based formal approaches for attack detection and mitigation for medical devices.

Events 2020

InvasIC Seminar, December 18, 2020, Virtual Talk: Sustained Performance Scaling in the Light of Bounded Growth and Technology Constraints

Prof. Holger Fröning (Universität Heidelberg)

The end of Moore's law has been predicted for many years, in combination with statements about exaggerations of its end. However, we are currently observing a phase of substantial slow-down in key metrics associated with silicon device manufacturing, and, as a result, huge issues with sustained performance scaling. In this talk, we reviewed the most important recent and current trends in computer architecture, in particular in the light of diminishing returns from feature size scaling and power constraints of CMOS technology. Ultimately, we see that energy efficiency is and will be key for continued performance scaling, albeit in substantially different flavors. In this context, particular attention was paid to predictive models for performance and power, as well as a short review of our efforts in the context of the DeepChip project, and how both can help continued performance scaling from a system's perspective.

InvasIC Seminar, December 11, 2020, Virtual Talk: Design of Low Power Hearing Aid Processors

Prof. Holger Blume (Leibniz Universität Hannover)

Processors for hearing aids are highly specialized for audio processing and they have to meet challenging hardware restrictions. This talk aims to provide an overview of the requirements, architectures and implementations of these processors. Special attention is paid to the increasingly important class of application specific instruction-set processors. The main focus of this talk lies on hardware-related aspects such as the processor architecture, the interfaces, the application-specific integrated circuit technology, and the operating conditions. The different hearing aid implementations are compared in terms of power consumption, silicon area, and computing performance for the algorithms used. Challenges for the design of future hearing aid processors being able to process AI-based hearing aid algorithms and EEG-enhanced hearing aid algorithms are discussed based on current trends and developments.

InvasIC Seminar, November 20, 2020, Virtual Talk: Journey from Mobile Platforms to Self-Powered Wearable Systems

Prof. Umit Ogras (Arizona State University, USA)

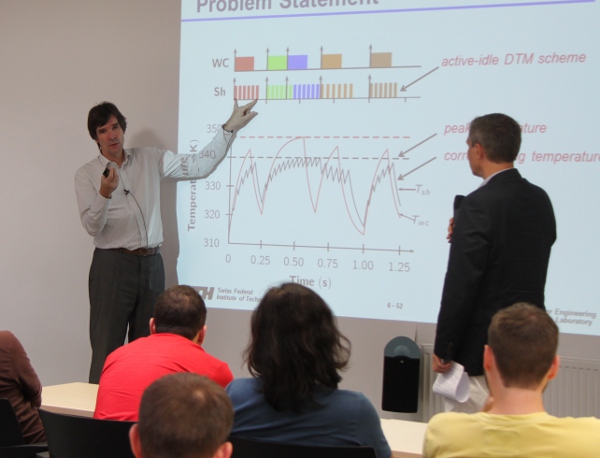

We experience a major shift in the form factor of electronic systems every 10 to 15 years. The most recent examples are the mobile platforms powered by heterogeneous system-on-chips (SoCs), while the next one is yet to dominate the market. Despite their impressive performance, mobile platforms still suffer from tight thermal constraints and resulting power consumption limitations. Furthermore, the complexity of current designs surpasses our ability to optimally control the power management knobs, such as the number, type and frequencies of active cores. The first part of this talk overviewed a few recent results on the modeling, analysis and optimization of power-temperature dynamics of multiprocessor SoCs. The accuracy and effectiveness of these approaches were demonstrated on commercial ARM and x86 (Atom) SoCs. In the second part, we overviewed “Systems-on-Polymer” as a candidate technology to drive the next wave of computing. This approach has recently been introduced to combine the advantages of flexible and traditional silicon technologies. Within this context, we presented a flexibility-aware design methodology, and a self-powered flexible prototype for wearable health monitoring.

Prof. Umit Ogras (Arizona State University, USA) spoke at the InvasIC Seminar.

InvasIC Seminar, January 7, 2020 at FAU: Code is Ethics — Formal Techniques for a Better World

Prof. Rolf Drechsler (University of Bremen/DFKI)

Prof. Dr.-Ing. Jürgen Teich, Prof. Drechsler and Prof. Dr. Oliver Keszöcze (from left).

Computers are involved in our everyday life, making increasingly consequential decisions. This raises the question of the ethics of these decisions, for example when autonomous cars are concerned. We argue that the ethics of the decisions taken by a computer are in fact those of the developers, encoded in the program ("code is ethics"). This encoding is mostly implicit: programmers and users are often not even aware of the implicit decisions that are being made before the program is even run. We suggest that formal methods are an excellent way to make the criteria under which these decisions are taken explicit, because formal specifications are more concise, abstract and clearer than code. This way, it becomes clear why systems act the way they do, and where the responsibility for their behaviour lies.

Events 2019

InvasIC Seminar, November 29, 2019 at FAU: Scalable Data Management on Modern Networks

Prof. Carsten Binnig (TU Darmstadt)

Prof. Carsten Binnig, Prof. Dr.-Ing. Jürgen Teich, Andreas Becher, Prof. Meyer-Wegener (from left).

As data processing evolves towards large scale, distributed platforms, the network will necessarily play a substantial role in achieving efficiency and performance. Modern high-speed networks such as InfiniBand, RoCE, or Omni-Path provide advanced features such as Remote-Direct-Memory-Access (RDMA) that have been shown to improve the performance and scalability of distributed data processing systems. Furthermore, switches and network cards are becoming more flexible while programmability at all levels (aka, software-defined networks) opens up many possibilities to tailor the network to data processing applications and to push processing down to the network elements. In this talk, I discussed opportunities and presented our recent research results to redesign scalable data management systems for the capabilities of modern networks.

InvasIC Seminar, September 27, 2019 at FAU: Design of Decentralized Embedded IoT Systems

Prof. Sebastian Steinhorst (TUM)

With the Internet of Things (IoT), technological advancements in the area of digitalization are introduced in almost all sectors of industry and society. IoT system architectures are traditionally designed in a hierarchical and centralized fashion, which, however, can no longer keep up with the increasing requirements of scalability, manageability, efficiency and security of the vast amount of emerging applications. Hence, decentralization might be the only possibility to meet the requirements imposed on future IoT systems, eventually driving architecture design towards self-organizing systems. However, in system architectures composed of resource-constrained embedded IoT devices, existing decentralization methodologies significantly exceed the computational and communication capabilities of such devices. Therefore, this talk illustrated our progress in developing design methods and algorithms that contribute to efficient decentralization of embedded IoT devices in system architectures. The talk discussed how consensus efficiency can be improved in different application scenarios such as autonomous driving and Industry 4.0. For the latter, we are also targeting the challenge of interoperability and self-organization in decentralized industrial IoT architectures. In this context, our recent advancements to describe the capabilities of IoT devices in a semantically strong form such that devices will be able to interoperate and coordinate themselves by exchanging their capabilities were presented. Eventually, such devices will be enabled to collaborate autonomously in order to perform system-level functionality.

InvasIC Seminar, July 19, 2019 at FAU: Approximate Computing: Design & Test for Integrated Circuits

Prof. Alberto Bosio (Ecole Centrale de Lyon, France)

Prof. Alberto Bosio (on the right) and Prof. Dr.-Ing. Jürgen Teich (left).

Today’s Integrated Circuits (ICs) are starting to reach the physical limits of CMOS technology. Among the multiple challenges, we can mention high leakage current (i.e., high static power consumption), reduced performance gain, reduced reliability, complex manufacturing processes leading to low yield and complex testing procedures, and extremely costly masks. In other words, ICs manufactured with the latest technology nodes are less and less efficient (w.r.t. both performance and energy consumption) than forecasted by Moore's law. Moreover, manufactured devices are becoming less and less reliable, meaning that errors can appear during the normal lifetime of a device with a higher probability than with previous technology nodes. Fault tolerant mechanisms are therefore required to ensure the correct behavior of such devices at the cost of extra area, power and timing overheads. Finally, process variations force engineers to add extra guard bands (e.g., higher supply voltage or lower clock frequency than required under normal circumstances) to guarantee the correct functioning of manufactured devices.

In recent years, the Approximate Computing (AxC) paradigm has emerged. AxC is based on the intuitive observation that, while performing exact computation requires a high amount of resources, allowing selective approximation or occasional violation of the specification can provide gains in efficiency (i.e., less power consumption, less area, higher manufacturing yield) without significantly affecting the output quality. This talk first discussed the basic concepts of the AxC paradigm and then focused on the Design & Test of Integrated Circuits for AxC-based systems. From the design point of view, a methodology able to automatically explore the impact of different approximation techniques on a given input algorithm was presented. The methodology consists of a design exploration tool able to find approximate versions of an algorithm described in software (i.e., coded in C/C++) by mutating the original code. The mutated (i.e., approximated) version of the algorithm is then synthesized using a High-Level Synthesis tool to obtain HDL code. The latter is finally mapped onto an FPGA to estimate the benefits of the approximation in terms of area reduction and performance. Regarding the test of integrated circuits, we start from the consideration that AxC-based systems can intrinsically accept the presence of faulty hardware (i.e., hardware that can produce errors). This paradigm is also called "computing on unreliable hardware". The hardware-induced errors have to be analyzed to determine their propagation through the system layers and, ultimately, their impact on the final application. In other words, an AxC-based system does not need to be built using defect-free ICs. Indeed, AxC-based systems can manage errors due to defective ICs at a higher level, or those errors simply do not significantly impact the final applications. Under this assumption, we can relax test and reliability constraints of the manufactured ICs. One of the ways to achieve this goal is to test only for a subset of faults instead of targeting all possible faults. In this way, we can reduce the manufacturing cost, since we reduce the number of test patterns and thus the test time.

InvasIC Seminar, May 17, 2019 at FAU: Optimizing Enterprise Servers across the Hardware and Software Stack

Dr. Silvia Melitta Mueller (IBM, Germany)

Enterprise servers are used for a wide range of business applications, spanning from traditional banking, accounting, and insurance applications to emerging workloads around Blockchain, security, and AI. All these applications have a few aspects in common: they are compute intensive, mission critical, and clients expect better performance and power performance from one generation to the next. This can best be achieved by co-optimizing hardware, compiler, and application.

A big difference between traditional and emerging applications is the degree of freedom for this optimization. Emerging workloads attack new frontiers and have the freedom of first-of-a-kind designs, being able to invent new data formats, system structures and algorithms. For traditional workloads, on the other hand, the systems have been optimized over several decades, and it is hard to still find big enhancements.

The talk showed how IBM optimizes its systems based on two extremes: (1) pushing the frontiers of Deep Learning / AI with ultra-low-precision arithmetic, and (2) speeding up banking applications by optimized arithmetic.

InvasIC Seminar, May 17, 2019 at TUM: On the road to Self-Driving IC Design Tools and Flows

Prof. Andrew B. Kahng (UC San Diego)

At today’s leading edge, critical scaling levers for semiconductor product companies include design cost, quality, and schedule. To reduce time and effort in IC implementation, fundamental challenges must be solved. First, the need for (expensive) humans must be removed wherever possible. Humans are skilled at predicting downstream flow failures, evaluating key early decisions such as RTL floorplanning, and deciding tool/flow options to apply to a given design. Achieving human-quality prediction, evaluation and decision-making will require new machine learning-centric models across designs, optimization heuristics, and EDA tools/flows. Second, to reduce design schedule, focus must return to the long-held dream of single-pass design. Future design tools and flows that never require iteration and never fail (but, without undue conservatism) demand new paradigms and core algorithms for parallel search in design automation. Third, learning-based models of tools and flows must continually improve with additional design experiences. Therefore, the EDA and design ecosystem must deploy new infrastructure for machine learning model development and sharing.

At UCSD, a recently launched U.S. DARPA project, OpenROAD (“Foundations and Realization of Open, Accessible Design”), seeks to develop electronic chip design automation tools for 24-hour, “no-human-in-the-loop” hardware layout generation. The project aims to combine several new approaches in chip design — machine learning and parallel optimization, along with "extreme partitioning" of the design problem — to develop a fast, autonomous design process. Today’s talk will sketch a few waypoints "on the road to self-driving IC design tools and flows", framed as opportunities for collaborations that span machine learning, IC design, EDA and academia.

Events 2018

InvasIC Seminar, November 16, 2018 at TUM: Algebraic Statistical Static Timing

Dr. Sani Nassif (Radyalis, USA)

One of the techniques for understanding the impact of process variability on digital circuit robustness and performance is statistical static timing analysis (SSTA). Early in its development, SSTA was expressed in terms of paths through the circuit, but later research has focused on block-based analysis. This talk revisits this topic framed in reference to analysis accuracy and overall performance. We show that a path-based approach lends itself well to more accurate models as well as a high-performance implementation based on high-performance BLAS libraries.

Invitation

InvasIC Seminar, September 20, 2018 at TUM: Comparing Voltage Adaptation Performance between Replica and In-Situ Timing Monitors

Prof. Masanori Hashimoto (Osaka University, Japan)

Adaptive voltage scaling (AVS) is a promising approach to overcome manufacturing variability, dynamic environmental fluctuation, and aging. This talk focused on the timing sensors necessary for AVS implementation and compared the in-situ timing error predictive FF (TEP-FF) and the critical path replica in terms of how much voltage margin can be reduced. For estimating the theoretical bound of ideal AVS, this work proposes a linear programming based minimum supply voltage analysis and discusses the voltage adaptation performance quantitatively by investigating the gap between the lower bound and actual supply voltages. Experimental results show that TEP-FF based AVS and replica based AVS achieve up to 13.3% and 8.9% supply voltage reduction, respectively, while satisfying the target MTTF. AVS with TEP-FF tracks the theoretical bound with 2.5 to 5.6% voltage margin, while AVS with replica needs 7.2 to 9.9% margin.

Invitation

InvasIC Seminar, August 31, 2018 at TUM: Routability Prediction in Early Placement Stages using Convolution Neural Networks

Assoc. Prof. Shao-Yun Fang (National Taiwan University of Science and Technology)

With the dramatic shrinking of feature size and the advance of semiconductor technology nodes, numerous and complicated design rules need to be followed, and a chip design can only be taped out after passing design rule check (DRC). The high design complexity seriously deteriorates design routability, which can be measured by the number of DRC violations (DRVs) after the detailed routing stage. Early routability prediction helps designers and tools take preventive measures so that DRVs can be avoided in a proactive manner. Thanks to the great advance of multi-core systems, machine learning has advanced considerably in recent years. In this talk, research methodologies leveraging convolutional neural networks (CNNs) for DRV prediction in both the macro placement and cell placement stages will be introduced.

Invitation

InvasIC Seminar, July 17, 2018 at FAU: Designing Static and Dynamic Software Systems - A SystemJ Perspective

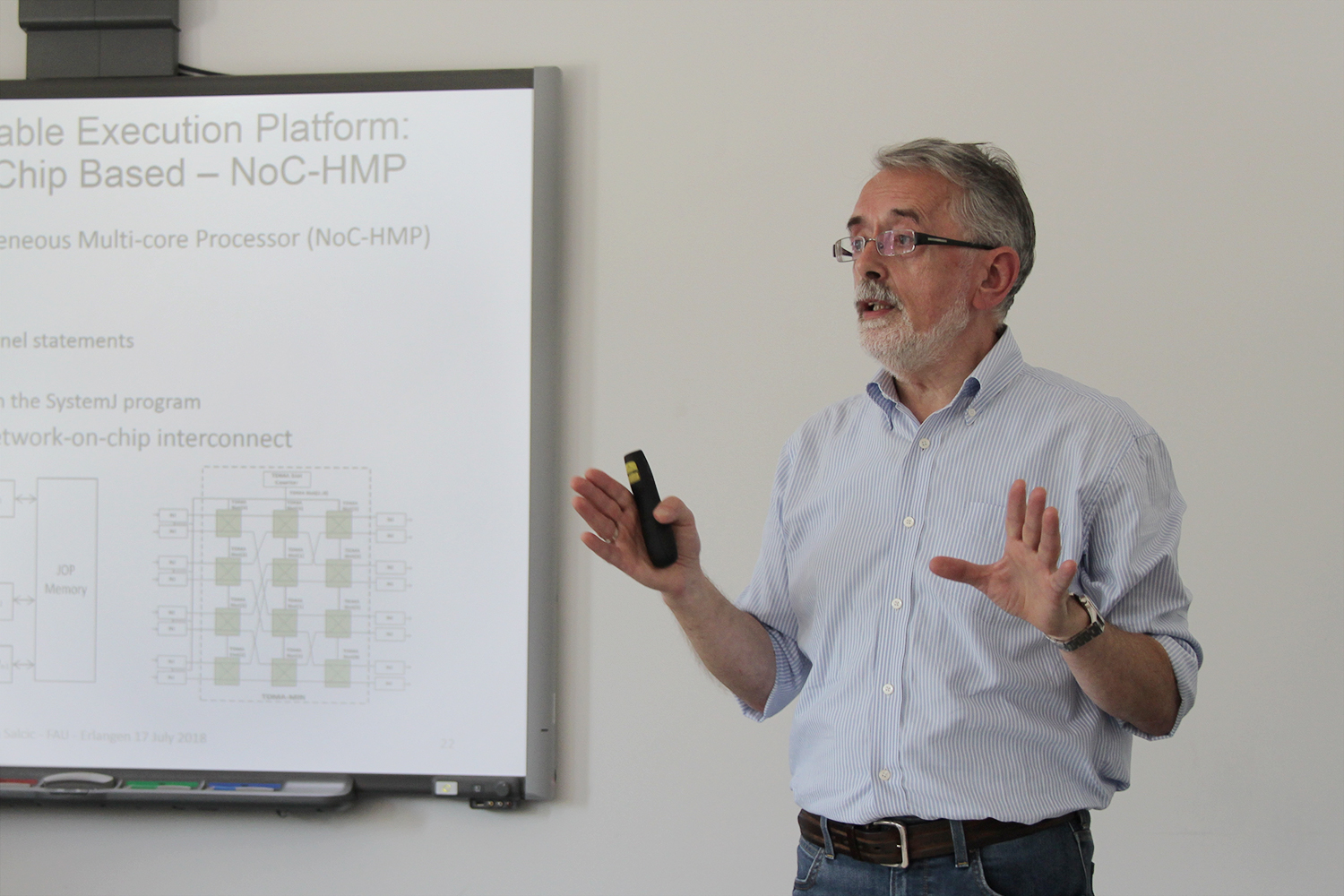

Prof. Zoran Salcic (The University of Auckland, New Zealand)

Embedded and cyber-physical systems involve hardware and software design and are becoming increasingly software-centric. Traditional programming languages such as C/C++ and Java have been used as the primary languages in designing these systems, where programming has typically been extended with run-time concepts that allow designers to employ concurrency (e.g. by using real-time operating systems or other types of run-time support). This led to a plethora of techniques to structure and run those systems and satisfy various functional and other requirements. However, structuring of software underpinned by formal, mathematical models remained on a side-track.

In this talk we presented an approach to software systems design underpinned by a formal model of computation (MoC), in our case Globally Asynchronous Locally Synchronous (GALS), which naturally models a huge number of embedded and cyber-physical systems, as well as emerging software systems that execute on distributed (networked) platforms. Based on the GALS MoC as the central theme, we developed a system-level programming language, SystemJ, that allows designers to design concurrent GALS software systems in a seamless way. Moreover, the approach still preserves the benefits of using a standard programming language, Java in the case of SystemJ. Originally aimed at the design of static concurrent programs/systems, the approach has been extended in two major directions: (1) (hard) real-time systems when time-predictable execution platforms are used and (2) dynamic, reconfigurable software systems, where the number of concurrent software behaviours varies over the time of system operation.

InvasIC Seminar, July 17, 2018 at TUM: Memristive Electronics

Prof. Sung-Mo (Steve) Kang (Jack Baskin School of Engineering, UC Santa Cruz)

The amount of data increased in the last two years alone accounts for ten percent of the total data available today. The sensing and acquisition, storage, and analysis of data are continually posing great challenges. The recent phenomenal advancement of artificial intelligence (AI) based on machine learning (ML) has found significant applications in many fields such as autonomous vehicles, medicine, and manufacturing. Machine learning and thus artificial intelligence can benefit from neuromorphic circuits and systems that are biologically inspired. In this talk we highlighted the hardware-software synergy, memristor-based electronics and applications for storage and emulation of neuronal behaviors, synaptic interconnects, and neuromorphic computing. Historical perspectives including Moore’s law and More than Moore, the current state of the art in nanoelectronics, and future challenges in the era of the fourth industrial revolution were also discussed.

InvasIC Seminar, July 12, 2018 at FAU: Interference analysis with models for multi-core platform, AURIX TC27x - the case study

Wei-Tsun Sun PhD (IRT Antoine de Saint Exupéry)

Interferences between cores have to be taken into account when performing timing analysis for multi-core platforms. This talk presents an approach to carry out interference analysis from the available data sheet(s) of a given platform. A description model is first captured from the data sheet and then translated to an AADL model. The AADL model is then transformed to Prolog predicates. STRANGE, a tool written in Prolog, is used to extract structural information from the predicates and is also able to detect all potential interferences. We use the AURIX TC27x as the showcase to demonstrate how the proposed methodology can be applied, which opens the possibility of adapting it to other architectures.
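As a rough illustration of the last step, the idea of detecting potential interferences from structural facts can be sketched in a few lines of Python. This is not the STRANGE tool and not its Prolog encoding: the `ACCESS` facts, the component names, and the function `potential_interferences` are hypothetical and are not taken from the AURIX TC27x data sheet. The sketch simply assumes that two cores may interfere whenever both can access the same resource.

```python
from itertools import combinations

# Hypothetical platform facts, written like Prolog predicates access(core, resource).
# The names are illustrative only and do not come from a real data sheet.
ACCESS = [
    ("core0", "crossbar"),
    ("core0", "local_ram0"),
    ("core1", "crossbar"),
    ("core1", "local_ram1"),
    ("core2", "crossbar"),
    ("core2", "local_ram1"),
]


def potential_interferences(access):
    """Return sorted (core_a, core_b, resource) triples for cores sharing a resource."""
    users = {}
    for core, resource in access:
        users.setdefault(resource, set()).add(core)

    found = []
    for resource, cores in users.items():
        # Every unordered pair of cores that can reach the same resource
        # is reported as a potential interference.
        for core_a, core_b in combinations(sorted(cores), 2):
            found.append((core_a, core_b, resource))
    return sorted(found)


if __name__ == "__main__":
    for core_a, core_b, resource in potential_interferences(ACCESS):
        print(f"{core_a} and {core_b} may interfere on {resource}")
```

A resource used by a single core (here `local_ram0`) produces no entry, which mirrors the intuition that private resources cannot cause inter-core interference.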

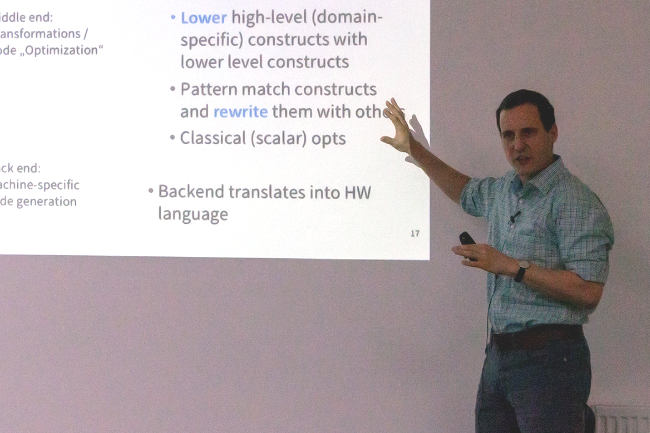

InvasIC Seminar, July 5, 2018 at FAU: AnyDSL: A Partial Evaluation Framework for Programming High-Performance Libraries

Prof. Sebastian Hack (Universität des Saarlandes)

Writing performance-critical software productively is still a challenging task because performance usually conflicts with genericity. Genericity makes programmers productive as it allows them to separate their software into components that can be exchanged and reused independently from each other. To achieve performance, however, it is mandatory to instantiate the code with algorithmic variants and parameters that stem from the application domain, and tailor the code towards the target architecture. This requires pervasive changes to the code that destroy genericity.

In this talk, I advocated programming high-performance code using partial evaluation and presented AnyDSL, a clean-slate programming system with a simple, annotation-based, online partial evaluator. I showed that AnyDSL can be used to productively implement high-performance codes from various different domains in a generic way and map them to different target architectures (CPUs with SIMD units, GPUs). Thereby, the code generated using AnyDSL achieves performance that is in the range of multi man-year, industry-grade, manually optimized expert codes and highly optimized code generated from domain-specific languages.

InvasIC Seminar, June 15, 2018 at FAU: Cross Media File Storage with Strata

Prof. Simon Peter (The University of Texas at Austin, USA)

Current hardware and application storage trends put immense pressure on the operating system's storage subsystem. On the hardware side, the market for storage devices has diversified to a multi-layer storage topology spanning multiple orders of magnitude in cost and performance. Applications increasingly need to process small, random IO on vast data sets with low latency, high throughput, and simple crash consistency. File systems designed for a single storage layer cannot support all of these demands together. In this talk, I characterize these hardware and software trends and then present Strata, a cross-media file system that leverages the strengths of one storage medium to compensate for weaknesses of another. In doing so, Strata provides performance, capacity, and a simple, synchronous IO model all at once, while having a simpler design than that of file systems constrained by a single storage device. At its heart, Strata uses a log-structured approach with a novel split of responsibilities among user mode, kernel, and storage layers that separates the concerns of scalable, high-performance persistence from storage layer management. On common server workloads, Strata achieves up to 2.6x better IO latency and throughput than the state-of-the-art in low-latency and cross media file systems.

InvasIC Seminar, June 6, 2018 at FAU: Hardware Isolation Framework for Security Mitigation in FPGA-Based Cloud Computing

Prof. Christophe Bobda (University of Arkansas, USA)

The fast integration of FPGAs in computing systems (desktop, embedded, cloud and data center) is pushing resource sharing directly into the hardware, away from the operating system. Cloud computing systems are one example where FPGAs are provided as resources that can be shared among several tenants. In an infrastructure as a service (IaaS) paradigm, each tenant can access the hardware directly to accelerate some computations as custom circuits in one or more FPGAs. While these systems introduce application programmers to the energy, flexibility, and performance benefits of FPGAs, integrating FPGAs as shared resources into existing clouds poses new security challenges. The sharing of FPGA resources among cloud tenants can lead to scenarios where accelerators are misused as potential covert channels among guests who reside in different security contexts. Among the ten paradigms (Deception, Separation, Diversity, Consistency, Depth, Discretion, Collection, Correlation, Awareness, Response) used to address security vulnerabilities, separation is one of the most effective approaches. Operating systems’ separation kernels have successfully implemented separation at the software level to isolate application-level threads in separate execution domains and contain potential damage caused by malicious components. We hypothesize that computing systems that extend resource sharing to the hardware, such as FPGAs, can be better protected by providing an efficient isolation infrastructure that extends system software separation to hardware components. The talk discussed a new security framework that allows controlled sharing and isolated execution of mutually distrusted accelerators in heterogeneous cloud systems. The proposed framework enables the accelerators to transparently inherit the software security policies of the virtual machine processes calling them during runtime. This capability allows the system's security policy enforcement mechanism to propagate access privilege boundaries expressed at the hypervisor level down to individual hardware accelerators. Furthermore, we present a software/hardware implementation of the proposed security framework that easily and transparently integrates into the hypervisors of today’s cloud systems. Evaluation of the security performance and the guest VM execution overhead introduced by the implementation prototype shows that the proposed framework provides isolated accelerator execution with almost zero execution overhead on guest VM applications.

InvasIC Seminar, March 26, 2018 at TUM: Opportunities and Challenges of Silicon Photonics for Computing Systems

Prof. Jiang Xu (Hong Kong University of Science and Technology)

Computing systems, from HPC and data center to automobile, aircraft, and cellphone, are integrating growing numbers of processors, accelerators, memories, and peripherals to meet the burgeoning performance requirements of new applications under tight cost, energy, thermal, space, and weight constraints. Recent advances in photonics technologies promise ultra-high bandwidth, low latency, and great energy efficiency to alleviate the inter/intra-rack, inter/intra-board, and inter/intra-chip communication bottlenecks in computing systems. Silicon photonics technologies piggyback onto developed silicon fabrication processes to provide viable and cost-effective solutions. A large number of silicon photonics devices and circuits have been demonstrated in CMOS-compatible fabrication processes. Silicon photonics technologies open up new opportunities for applications, architectures, design techniques, and design automation tools to fully explore new approaches and address the challenges of next-generation computing systems. This talk will present our recent work on holistic comparisons of optical and electrical interconnects, unified optical network-on-chip, memory optical interconnect network, and high-radix optical switching fabric for data centers, among other topics.

InvasIC Seminar, March 16, 2018 at TUM: Self-Awareness for Heterogeneous MPSoCs: A Case Study using Adaptive, Reflective Middleware

Prof. Nikil Dutt (University of California, Irvine)

Self-awareness has a long history in biology, psychology, medicine, engineering and (more recently) computing. In the past decade this has inspired new self-aware strategies for emerging computing substrates (e.g., complex heterogeneous MPSoCs) that must cope with the (often conflicting) challenges of resiliency, energy, heat, cost, performance, security, etc. in the face of highly dynamic operational behaviors and environmental conditions. Earlier we had championed the concept of CyberPhysical-Systems-on-Chip (CPSoC), a new class of sensor-actuator rich many-core computing platforms that intrinsically couples on-chip and cross-layer sensing and actuation to enable self-awareness. Unlike traditional MPSoCs, CPSoC is distinguished by an intelligent co-design of the control, communication, and computing (C3) system that interacts with the physical environment in real-time in order to modify the system’s behavior so as to adaptively achieve desired objectives and Quality-of-Service (QoS). The CPSoC design paradigm enables self-awareness (i.e., the ability of the system to observe its own internal and external behaviors such that it is capable of making judicious decisions) and (opportunistic) adaptation using the concept of cross-layer physical and virtual sensing and actuation applied across different layers of the hardware/software system stack. The closed-loop control used for adaptation to dynamic variation -- commonly known as the observe-decide-act (ODA) loop -- is implemented using an adaptive, reflective middleware layer. In this talk I presented a case study of this adaptive, reflective middleware layer using a holistic approach for performing resource allocation decisions and power management by leveraging concepts from reflective software. Reflection enables dynamic adaptation based on both external feedback and introspection (i.e., self-assessment). In our context, this translates into performing resource management actuation considering both sensing information (e.g., readings from performance counters, power sensors, etc.) to assess the current system state, as well as models to predict the behavior of other system components before performing an action. I summarized results leveraging our adaptive-reflective middleware toolchain to i) perform energy-efficient task mapping on heterogeneous architectures, ii) explore the design space of novel HMP architectures, and iii) extend the lifetime of mobile devices.
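The general shape of an observe-decide-act loop can be sketched as follows. This is a minimal toy in Python, not the middleware presented in the talk: the functions `observe`, `decide`, `act`, and `run_oda`, the integer frequency levels, and the assumed power model (500 mW per frequency level) are all hypothetical and chosen only to keep the example self-contained.

```python
MW_PER_LEVEL = 500  # assumed toy power model: each frequency level costs 500 mW


def observe(level):
    """Observe: read the (simulated) power sensor for the current frequency level."""
    return level * MW_PER_LEVEL


def decide(power_mw, budget_mw):
    """Decide: compare the observation against the power budget."""
    if power_mw > budget_mw:
        return "lower"
    if power_mw < budget_mw * 8 // 10:  # more than 20% headroom left
        return "raise"
    return "hold"


def act(level, action):
    """Act: apply the chosen action to the (simulated) frequency knob."""
    if action == "lower":
        return max(level - 1, 1)
    if action == "raise":
        return level + 1
    return level


def run_oda(level, budget_mw, steps):
    """Run the loop for a fixed number of steps; return final level and action log."""
    actions = []
    for _ in range(steps):
        power_mw = observe(level)
        action = decide(power_mw, budget_mw)
        level = act(level, action)
        actions.append(action)
    return level, actions


if __name__ == "__main__":
    print(run_oda(level=5, budget_mw=2000, steps=4))
```

A reflective middleware as described above would additionally consult predictive models before acting; this sketch only reacts to the current sensor reading.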

Events 2017

InvasIC Seminar, October 12, 2017 at TUM: High Performance Computing for Real-Time Applications: A Case Study involving Space-craft Descent on a Planetary Surface

Prof. Amitava Gupta (Jadavpur University, Kolkata, India)

Spacecraft designed for planetary missions often involve a probe that separates from an orbiter and lands on the planetary surface. Typical examples of these are the NASA rovers on Mars, the early lunar missions by the United States and the Soviet Union and, in the present day, the attempted descent by the ESA Schiaparelli. Such spacecraft are typically lander modules, which are smaller craft with limited computing power and power resources and are endowed with autonomous systems that use imaging to navigate a descent.

Navigation of a lander module starts with an initial hazard map, which is a map of the terrain taken from an orbiter hundreds and sometimes thousands of kilometers above the planetary surface with resolutions as coarse as several hundred metres. Loading the lander with a more detailed hazard map poses a constraint in terms of the weight of the imaging equipment and hence the payload to be carried. An alternative approach is vision-guided descent, where the onboard imaging equipment associated with the lander progressively refines the terrain image and corrects the lander’s trajectory to identify a suitable landing spot, thus translating the problem to the realm of vision-guided control.

Experiments on vision-guided landing of toy quadcopters have been successful, but that is a relatively easier problem because the quadcopter's control algorithm is not constrained by the limitation that displacement can only be towards the planet during descent. For a lander, movements in the X-Y direction can only be achieved along specific X, Y, Z trajectories depending on the lander’s velocity. This is a tricky job and requires fast computation with timing and computing power constraints. Thus, this is much different from a quadcopter landing problem and encompasses several open areas of research, such as development optimization for processing power and the constraints imposed by the quantum of change that can be handled by the image processing algorithm on the trajectory control algorithm, to name only a few, and thus links the problem to the realm of High Performance Computing (HPC). Seamless recovery from a processing element failure with migration of data is another aspect of this problem intimately linked to HPC.

The talk introduced the problem and the motivation of the HPC approach to solve it, then moved on to identify the processing elements, connectivity technology and algorithms spanning diverse domains such as image processing, HPC and Embedded Systems, and finally brought out the possibilities of a collaborative research initiative.

InvasIC Seminar, October 5, 2017 at FAU: Applying Model-Driven Engineering in the co-design of Real-Time Embedded Systems

Prof. Marco Wehrmeister (Federal University of Technology - Parana)

This talk presented methods and techniques applied in the co-design of real-time embedded systems, specifically those that are implemented as a System-on-Chip (SoC) that includes components with reconfigurable logic (FPGA). The target application domain is automation systems. The main objective was to discuss techniques and methods that use high-level abstractions, such as UML/MARTE models and concepts of Aspect-Oriented Software Development (AOSD), for an integrated co-design addressing both software and hardware design. To this end, model-driven engineering (MDE) techniques were introduced, combined with separation of concerns in the handling of functional and non-functional requirements. Automatic transformations between models allow the information specified in different high-level modeling languages to be integrated and shared within the (co-)design of the hardware and software components. To illustrate such transformations, code generation techniques were presented for software components (e.g., Java and C/C++) and hardware (VHDL), applied in a case study that represents a real application. Results indicate that the abstraction increase obtained by using MDE and the separation of concerns leads to an improvement in the reuse and adaptation of software components. Thus, by applying these ideas in the design of hardware components in FPGA, one can obtain similar benefits.

click here for the video version with slides

InvasIC Seminar, July 14, 2017, at TUM: Learning-Based Models for Power- and Performance-Aware System Design

Prof. Andreas Gerstlauer (University of Texas at Austin, USA)

Next to performance, power and energy efficiency are key challenges in computer systems today. Fundamentally, energy efficiency is achieved by reducing computational overhead or effort through specializations or approximations. Both require tight co-design of application-specific architectures. Traditionally, slow simulations or inaccurate analytical methods are used to perform the corresponding optimization. In the first part of this talk, I will present our work on fast yet accurate alternatives for early power and performance estimation to support hardware and software design and optimization. In the past, we have pioneered semi-analytical source-level and host-compiled simulation techniques. More recently, we have studied approaches that employ advanced machine learning techniques to synthesize models that can accurately predict power and performance of a target platform purely from characteristics obtained while running an application natively or in a fast functional simulation on a different host. We have developed such learning-based approaches for both hardware accelerators and software on CPUs. In the second part of the talk, I will further discuss our work on employing a variety of modeling approaches to design energy-efficient systems through hardware specializations and approximations. We have designed domain-specific accelerators that achieve orders of magnitude improved power/performance efficiencies for dense linear algebra operations, which are at the core of almost all scientific, signal processing and machine learning applications. Furthermore, we have developed systematic and automated methods for design and synthesis of accelerators that employ a range of hardware approximations to support novel types of quality-energy tradeoffs during system exploration.

InvasIC Seminar, July 12, 2017, at TUM: Data-Driven Resiliency Solutions for Integrated Circuits and Systems

Prof. Krishnendu Chakrabarty (Duke University, USA)

Design-time solutions and guard-bands for resilience are no longer sufficient for integrated circuits and electronic systems. This presentation described how data analytics and real-time monitoring can be used to ensure that integrated circuits, boards, and systems operate as intended. The speaker first presented a representative critical path (RCP) selection method based on machine learning and linear algebra that allows us to measure the delay of a small set of paths and infer the delay of a much larger pool of paths. In the second part of the talk, the speaker focused on the resilience problem for boards and systems; we are seeing a significant gap today between working silicon and a working board/system, which is reflected in failures at the board and system level that cannot be duplicated at the component level. The speaker described how machine learning, statistical techniques, and information-theoretic analysis can be used to close the gap between working silicon and a working system. Finally, the presenter described how time-series analysis can be used to detect anomalies in complex core router systems.

InvasIC Seminar, May 5, 2017 at FAU: Designing autonomic heterogeneous computing architectures: a vision

Prof. Donatella Sciuto (Politecnico di Milano)

The resources available on a chip, such as transistors and memory, the level of integration, and the speed of components have increased dramatically over the years. Even though the technologies have improved, we continue to apply outdated approaches to our use of these resources. Key computer science abstractions have not changed since the 1960s. The operating systems and languages we use were designed for a different era. It is therefore time to devise a new approach to system design and use. Self-aware computing research leverages the new balance of resources to improve performance, utilization, reliability and programmability.

The main idea is to combine the massively parallel, heterogeneous computational resources now available with autonomic characteristics, creating computing systems capable of configuring, healing, optimizing, and protecting themselves and of improving interaction without the need for human intervention. Such systems exploit capabilities that allow them to automatically find the best way to accomplish a given goal within the specified resources (power budget and performance).

What we are envisioning is a revolutionary computing system that can observe its own execution and optimize its behavior with respect to the external environment, the user's desiderata and the applications' demands. Imagine letting users specify their desired goals rather than how to perform a task, along with constraints in terms of energy budget, time, and result accuracy. Imagine, further, a computing chip that performs better, according to a set of goals expressed by the user, the longer it runs an application. Such an architecture will allow, for example, a hand-held radio to run cooler the longer the connection time, or a system to perform reliably by tolerating hard and transient failures through self-healing. This characteristic will not be provided from the outside as an input to the device, as happens today in system upgrades, but will instead be intrinsically embedded in the device, according to the target objective and the inputs from the external environment. This will make such devices particularly well suited for pervasive computing and control applications such as mobile computing systems and adaptive secure infrastructures. The talk presented how autonomic behavior combined with adaptive hardware technologies will enable systems to change features of their behavior in a way completely different from today's system upgrades, where the new system behavior is defined by human design effort. Behavior adaptation will instead be intrinsically embedded in the device and based on the target goals and the inputs coming from the external environment.

click here for the video version with slides

InvasIC Seminar, May 3, 2017, at TUM: Bringing Dynamic Control to Real-time NoCs

Prof. Rolf Ernst (TU Braunschweig)

In many new applications, such as automatic driving, high performance requirements have reached safety-critical real-time systems. Static platform management, as used in current safety-critical systems, is no longer sufficient to provide the needed level of performance. Dynamic platform management could meet the challenge but usually lacks the predictability and simplicity needed for certification of safety and real-time properties. This especially holds for the Network-on-Chip (NoC), which is crucial for both performance and predictability. In this talk, we proposed the introduction of a NoC resource management that controls NoC resource allocation and scheduling. Resource management is based on a model of the global system state. We provided a protocol and a real-time analysis that give worst-case guarantees for control, NoC communication, and memory access timing. The approach supports mixed-criticality systems with different QoS requirements and traffic classes. The protocol uses key elements of a Software Defined Network (SDN), separating the NoC into a (virtual) control plane and a data plane and thereby simplifying dynamic adaptation and real-time analysis. The approach is not limited to a specific network architecture or topology. Significant improvements compared to static NoC scheduling were demonstrated.

InvasIC Seminar, May 3, 2017, at TUM: Potential Impact of Future Disruptive Technologies on Embedded Multicore Computing

Prof. Theo Ungerer (Universität Augsburg)

Current and new applications have an ever-growing need for increased performance in IoT and embedded computing, but also in mid-level and high-performance computing. Because of the foreseeable end of CMOS scaling, new technologies are under development, such as die stacking and 3D chip technologies, Non-volatile Memory (NVM) technologies, Photonics, Resistive or Memristive Computing, Neuromorphic Computing, Quantum Computing, Nanotubes, Graphene, and Diamond Transistors. Some of these technologies are still very speculative and it is hard to predict which ones will prevail. The technologies will strongly impact the hardware and software of future computing systems, in particular the processor logic itself, the (deeper) memory hierarchy, and new heterogeneous accelerators. While disruptive technologies offer many opportunities, they also entail major changes, from applications and software systems through to new hardware architectures. One challenge for the Computer Science community is to develop flexible models for the upcoming disruptive technologies, given that it is currently not clear which of the new technologies will be successful. The talk gave an overview of the ongoing roadmapping efforts within the EC CSAs Eurolab-4-HPC. The Eurolab-4-HPC roadmap is a long-term roadmap (2022-2030) for High-Performance Computing (HPC). Because of the long-term perspective and its speculative nature, the roadmapping effort started with an assessment of future computing technologies that could influence HPC hardware and software. An assessment of the technologies and their potential impact (state of August 2016) was described in the Report on Disruptive Technologies for Years 2020-2030 and the Eurolab-4-HPC preliminary roadmap itself. The talk discussed the potential impact of such Disruptive Technologies on future computer architectures and system structures for embedded and cyber-physical systems, and servers.

InvasIC Seminar, March 17, 2017 at FAU: Game-theoretic Semantics of Synchronous Reactions

Prof. Michael Mendler (University of Bamberg)

The synchronous model of programming, which emerged in the 1980s and has led to the development of well-known languages such as Statecharts, Esterel, Signal, and Lustre, has made the programming of concurrent systems with deterministic and bounded reaction a routine exercise. However, the validity of this model does not come for free. It depends on the Synchrony Hypothesis, according to which a system is invariably faster than its environment. Yet this raises a tangled compositionality problem. Since a node is in the environment of every other node, it follows that each node must be faster than every other and hence faster than itself!

This talk presents a game-theoretic semantics of boolean logic defining the constructive interpretation of step responses for synchronous languages. This provides a coherent semantic framework encompassing both non-deterministic Statecharts (as per Pnueli & Shalev) and deterministic Esterel. The talk sketches a general theory for obtaining different notions of constructive responses in terms of winning conditions for finite and infinite games and their characterisation as maximal post-fixed points of functions in directed complete lattices of intensional truth-values.
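To make the notion of a constructive step response more concrete, the following minimal sketch uses a different but related and widely known formulation: least fixed-point iteration in three-valued logic, where `None` stands for "not yet determined". It is not the game-theoretic semantics of the talk. The names `step_response`, `t_and`, `t_or` and `t_not` are hypothetical and chosen for illustration only.

```python
from typing import Callable, Dict, Optional

Tri = Optional[bool]          # None means "not yet determined"
Env = Dict[str, Tri]


def t_not(a: Tri) -> Tri:
    return None if a is None else (not a)


def t_and(a: Tri, b: Tri) -> Tri:
    # False dominates even if the other operand is still unknown.
    if a is False or b is False:
        return False
    if a is True and b is True:
        return True
    return None


def t_or(a: Tri, b: Tri) -> Tri:
    # True dominates even if the other operand is still unknown.
    if a is True or b is True:
        return True
    if a is False and b is False:
        return False
    return None


def step_response(equations: Dict[str, Callable[[Env], Tri]], inputs: Env) -> Env:
    """Start with every defined signal unknown and re-evaluate until stable."""
    env: Env = {name: None for name in equations}
    env.update(inputs)
    changed = True
    while changed:
        changed = False
        for name, rhs in equations.items():
            value = rhs(env)
            # Signals only ever move from unknown to a definite value.
            if env[name] is None and value is not None:
                env[name] = value
                changed = True
    return env


# A cyclic system: x = i and not y, y = i and x.
cyclic = {
    "x": lambda e: t_and(e["i"], t_not(e["y"])),
    "y": lambda e: t_and(e["i"], e["x"]),
}
print(step_response(cyclic, {"i": False}))
print(step_response(cyclic, {"i": True}))
```

With input `i` false, both signals in the cycle resolve to false, so under this formulation the reaction is accepted. With `i` true, `x` and `y` stay undetermined, which is the kind of causality cycle a constructive semantics rejects.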

click here for the video version with slides

Events 2016

InvasIC Seminar, December 13, 2016 at TUM: A Novel Cross-Layer Framework for Early-Stage Power Delivery and Architecture Co-Exploration

Prof. Yiyu Shi (University of Notre Dame)

With the reduced noise margin brought by relentless technology scaling, power integrity assurance has become more challenging than ever. On the other hand, traditional design methodologies typically focus on a single design layer without much cross-layer interaction, potentially introducing unnecessary guard-bands and wasting significant design resources. Both issues call for a cross-layer framework for the co-exploration of power delivery (PD) and system architecture, especially in the early design stage, where there is more freedom for design and optimization. Unfortunately, such a framework does not yet exist in the literature. As a step forward, this talk presents a run-time simulation framework that covers both PD and architecture and captures their interactions. Enabled by the proposed recursive run-time PD model, it simulates an entire SoC PD system on the fly with <1% deviation from SPICE. Moreover, with seamless interaction among architecture, power and PD simulators, it can simulate benchmarks with millions of cycles within reasonable time. A support vector regression (SVR) model is also employed to further speed up power estimation of functional units to millions of cycles per second with good accuracy. Experimental results from running the PARSEC suite illustrate the framework’s capability to explore hardware configurations and discover the combined effect of PD and architecture for early-stage design optimization. They also reveal multiple sources of over-pessimism in traditional methodologies. For example, by capturing the closed-loop PD and system interaction, the peak-to-peak noise shows a 10% reduction, with a potential 7% power saving.